Amazon Route 53 is the AWS DNS service; it holds the hosted zones and DNS records for our domains. An organization usually cannot serve everything it offers from its main domain, so it often creates subdomains to host different services.

For example, if our domain is example.com, we can create the following subdomains for different purposes:

support.example.com - Customer support service

blog.example.com - To host an organization blog

mail.example.com - To host email service

careers.example.com - Job page

Modern websites often point subdomains at third-party services. Zendesk, Jira, Salesforce, GitLab, hosted email, and similar services can each be integrated into a subdomain.

A subdomain takeover usually becomes possible when the service behind a subdomain is discontinued but its DNS record remains in our Route 53 hosted zone. An attacker can then register the same resource with the service provider, and because the DNS record is already in place, the subdomain immediately serves the attacker’s resource. Control of the subdomain has effectively passed to the attacker, who can host any malicious or misleading content on it.

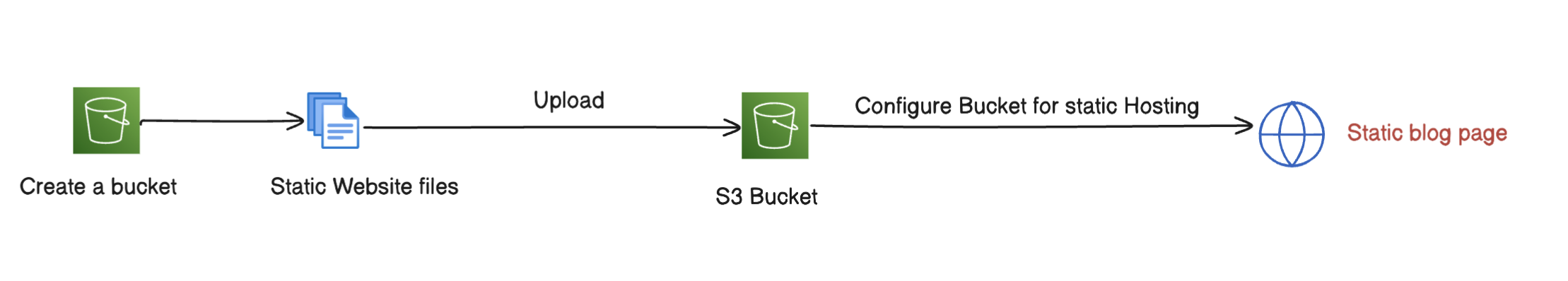

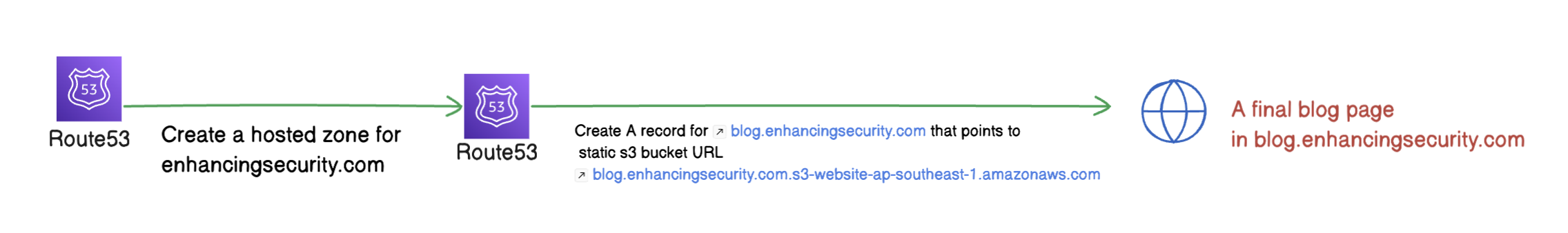

To demonstrate a subdomain takeover in Route 53, we will create a static website in an S3 bucket and then point a Route 53 record at that bucket.

Later, we’ll delete the bucket but leave the Route 53 record in place, which leaves the subdomain vulnerable. Then we will take over the subdomain by recreating the deleted bucket in a different AWS account.

Part 1: Creating a static website in an S3 bucket

In this part, we will create an S3 bucket, upload an HTML file to it, and configure it for static website hosting.

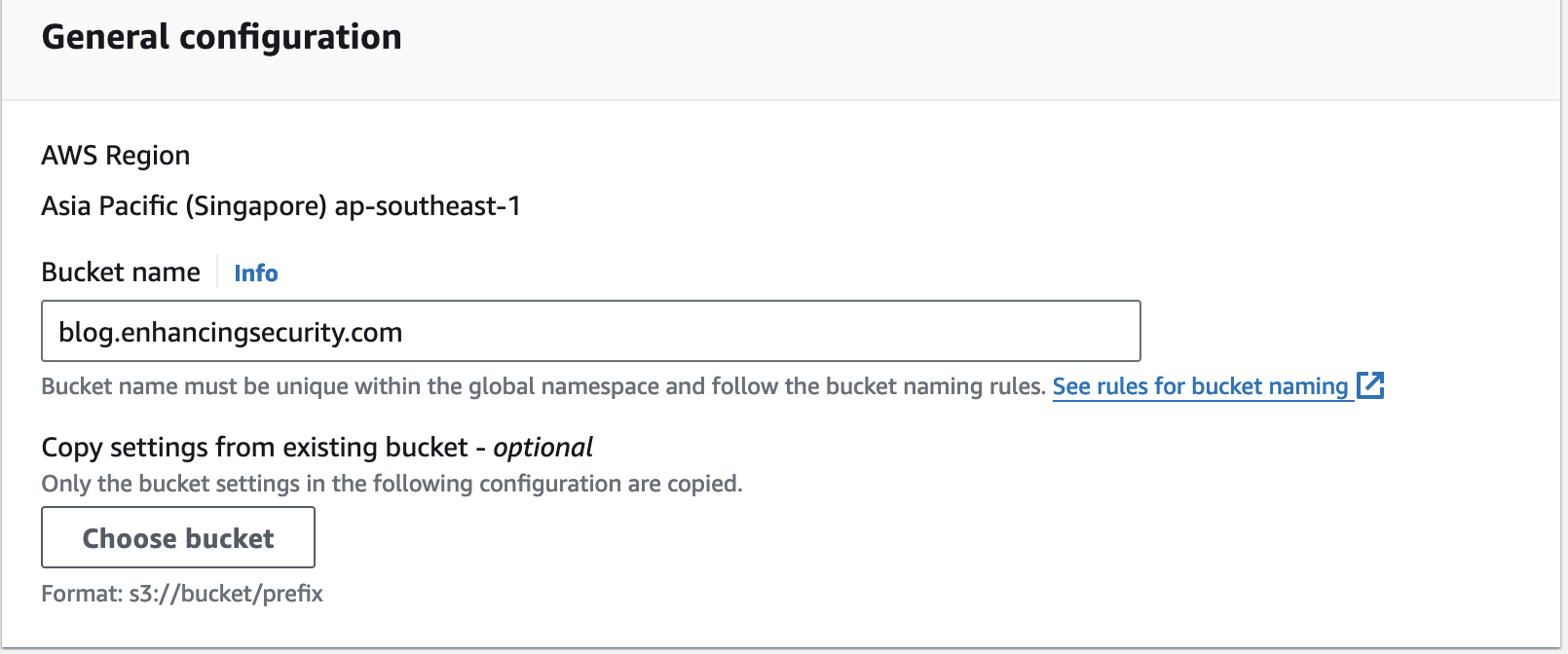

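- Go to S3 and create a new bucket named blog.enhancingsecurity.com. The bucket name must match the subdomain we plan to use, because a Route 53 alias to an S3 website endpoint requires the record name and the bucket name to be the same.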

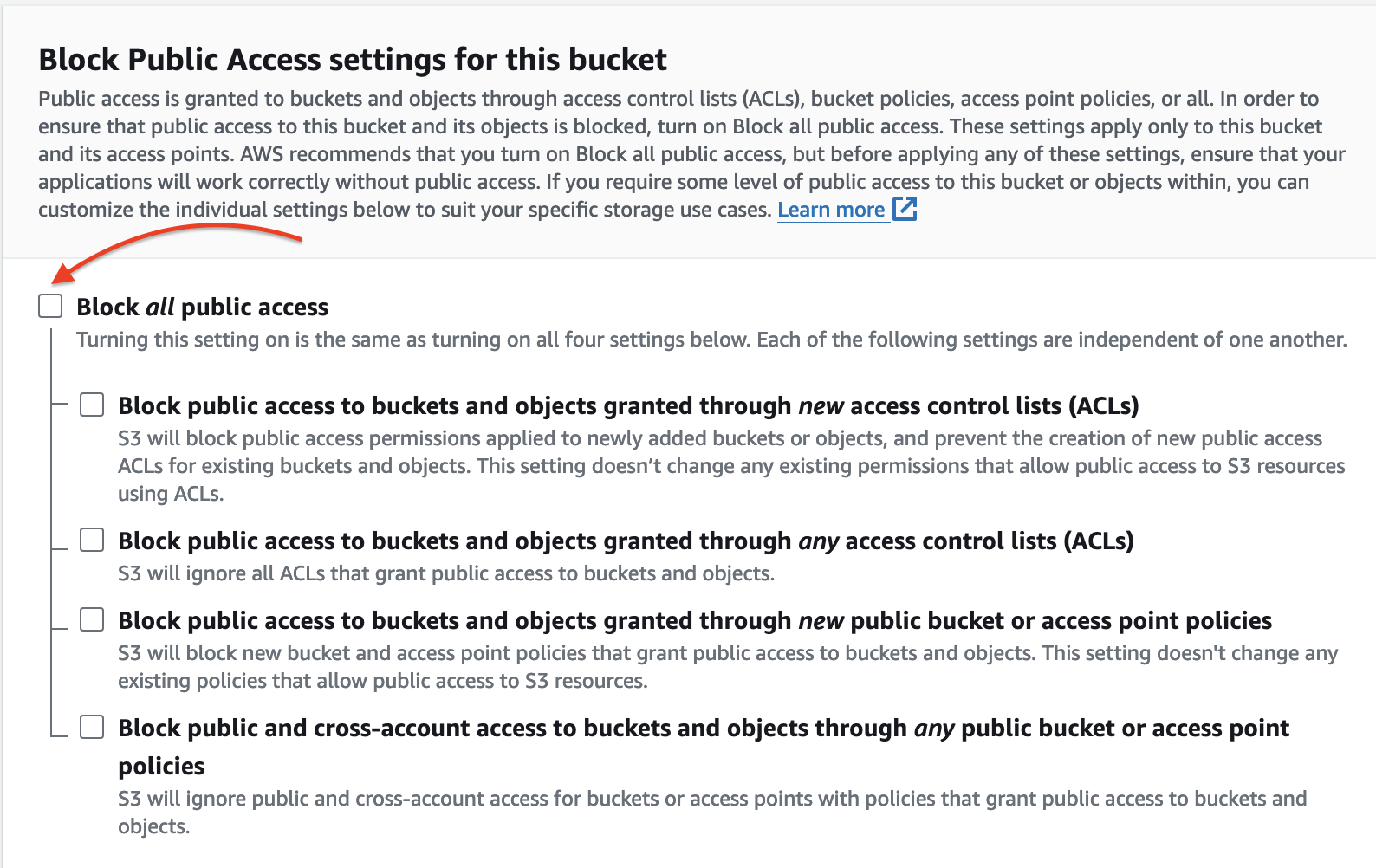

- Make sure you select “ACLs enabled” under Object Ownership and uncheck “Block all public access”, since this is a website and its files must be accessible to the public.

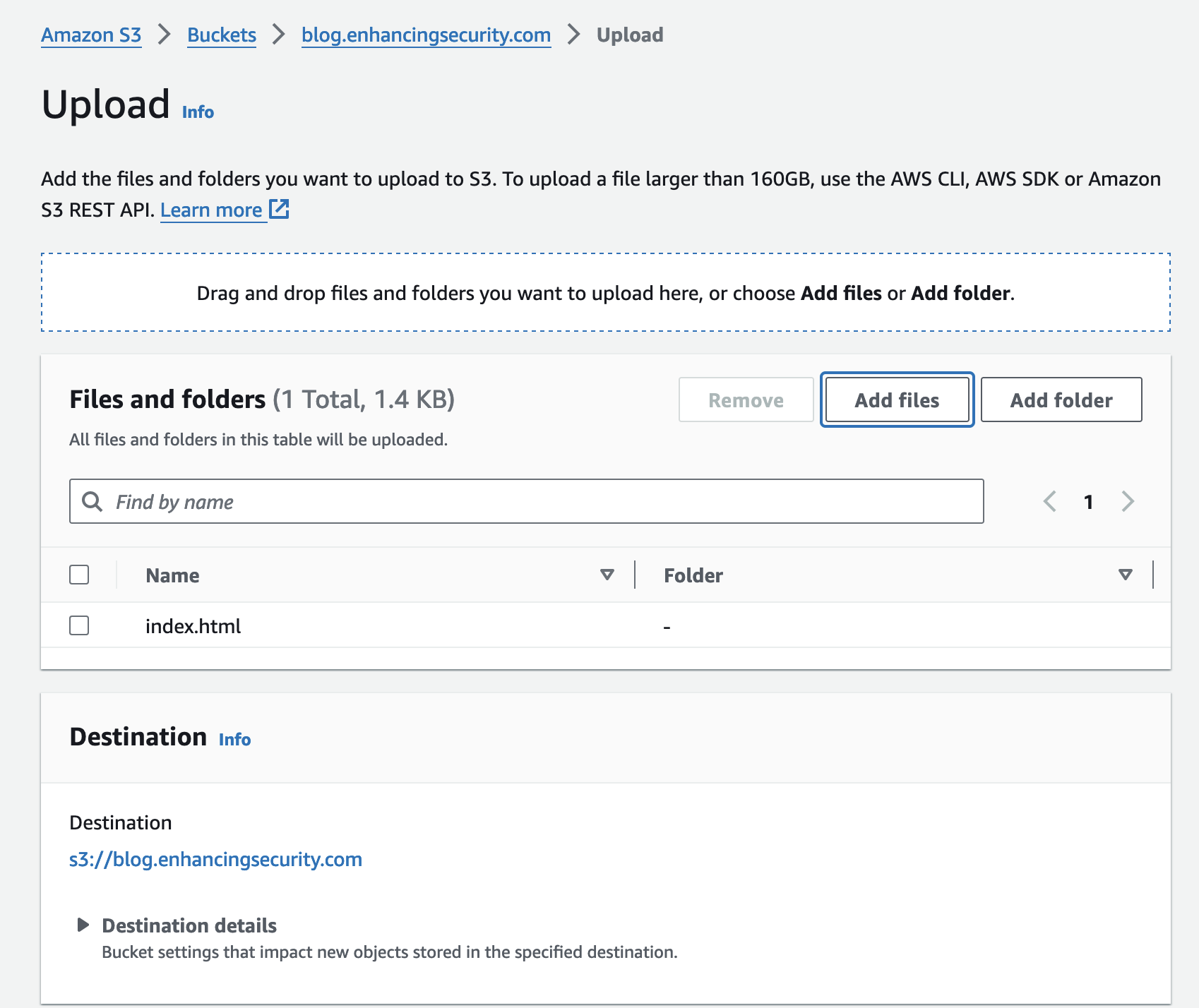

- Now, we will write a simple HTML file and upload it into the bucket.

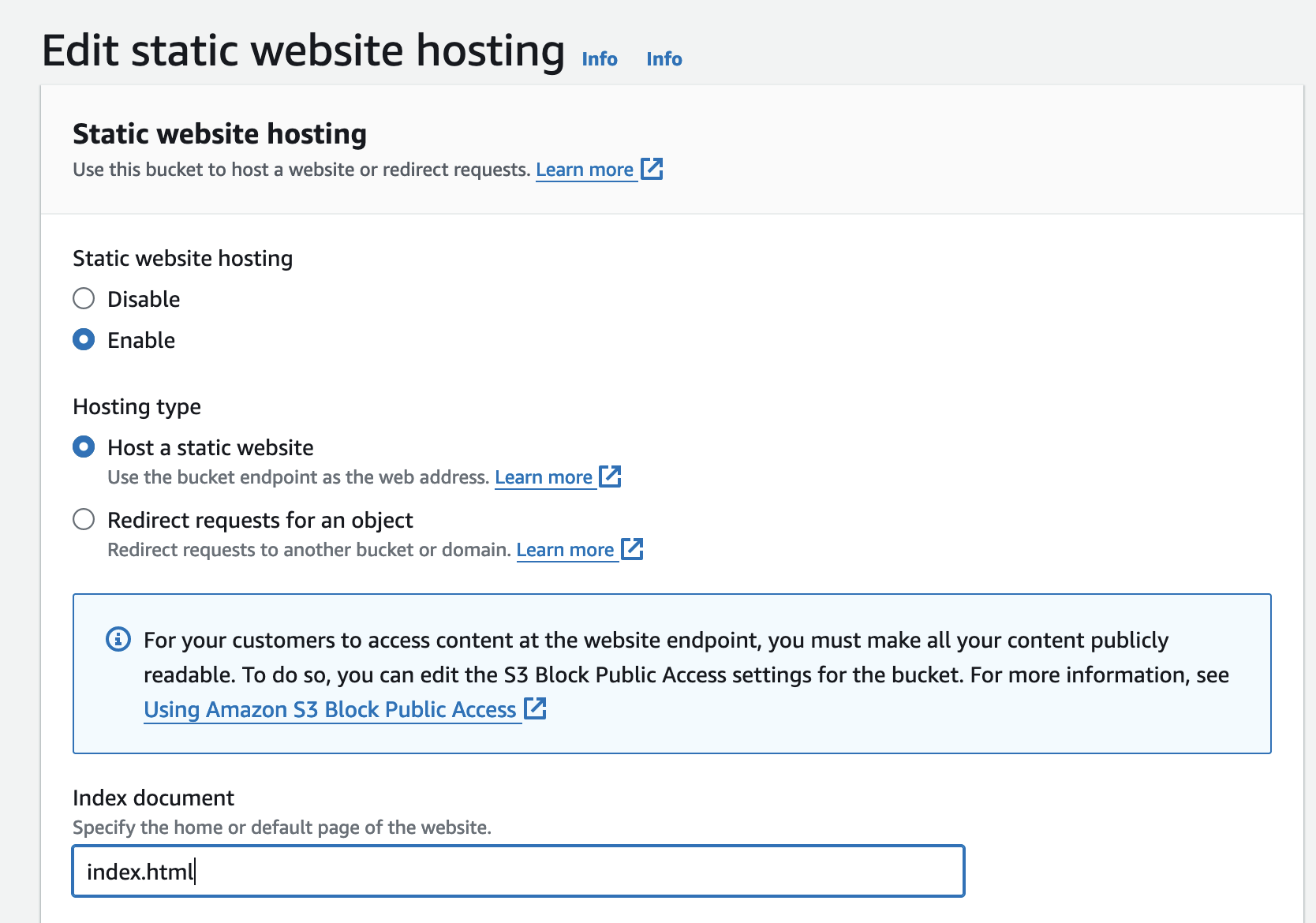

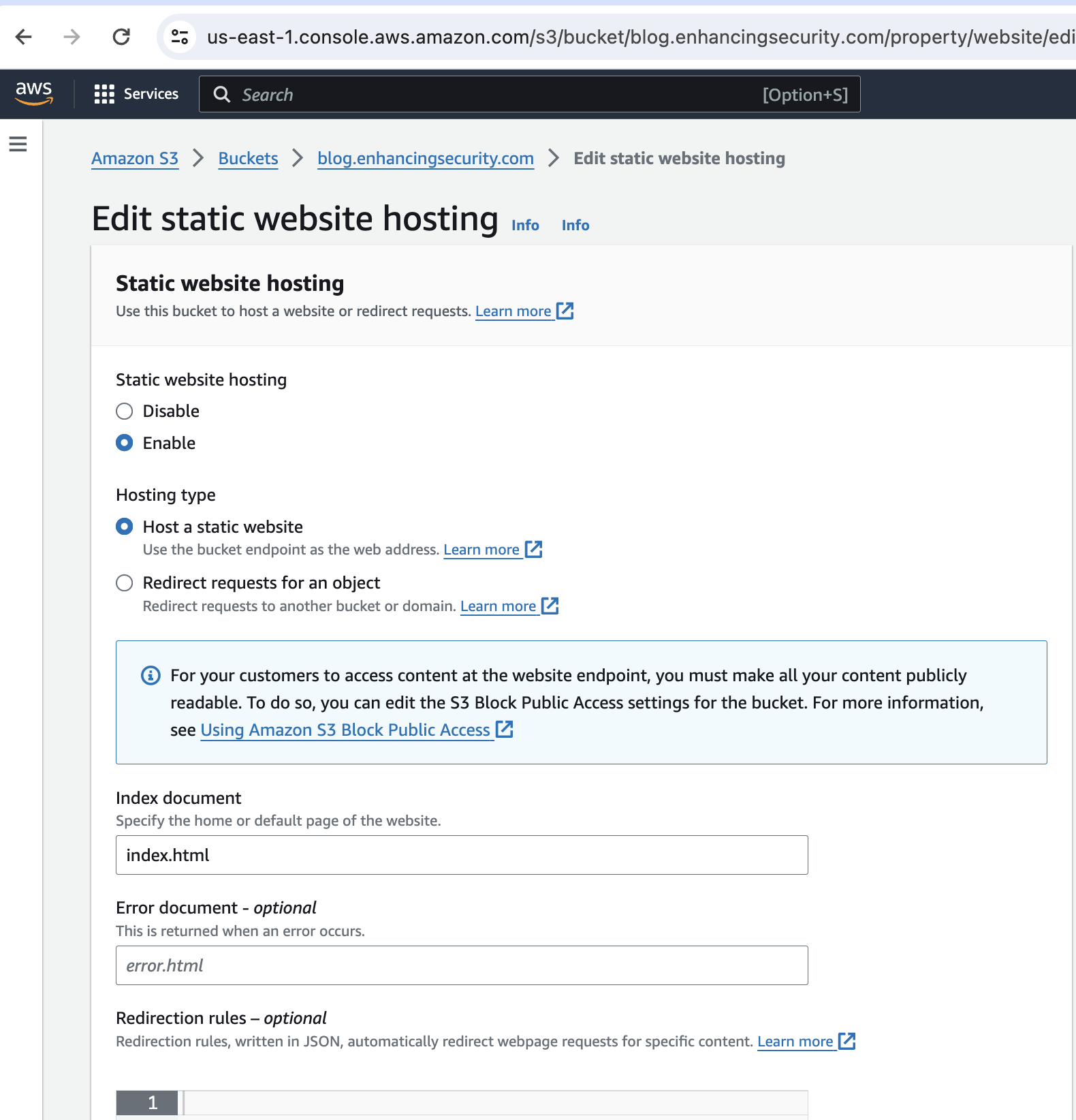

- After a successful upload, go to the bucket properties and scroll to the bottom of the page, where you can enable “Static website hosting”. When enabling it, we need to specify the default page for our website; we will enter “index.html” as the index document for our blog.

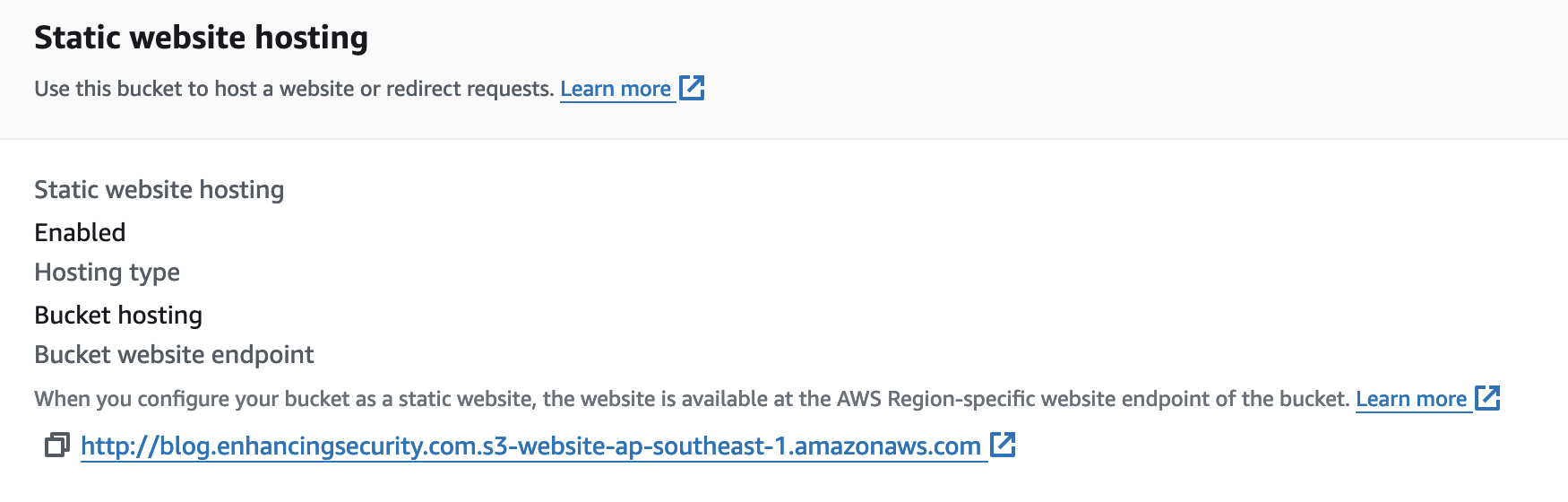

- Once the static website is created we can see our website address as shown below.

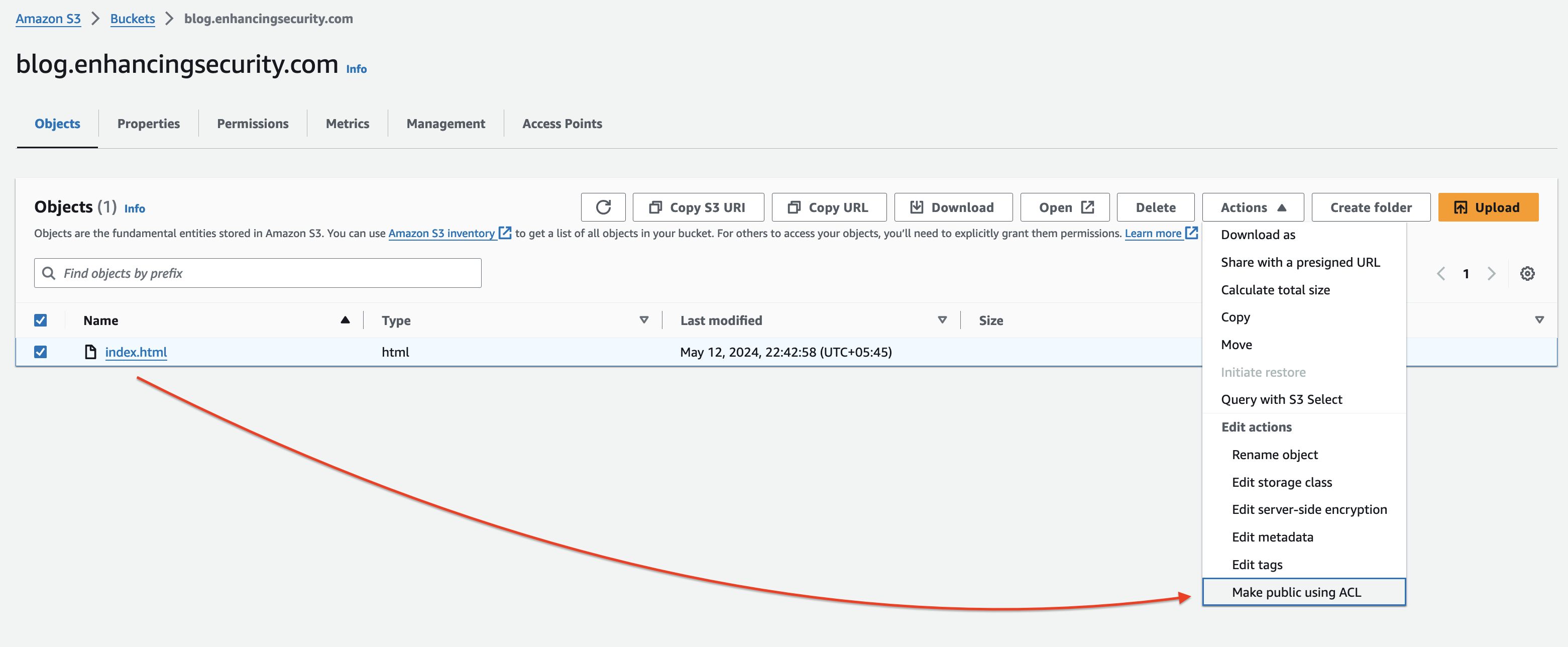

- Now we’ll make the bucket objects public: select all the objects in the bucket, open the Actions menu, and choose “Make public using ACL”.

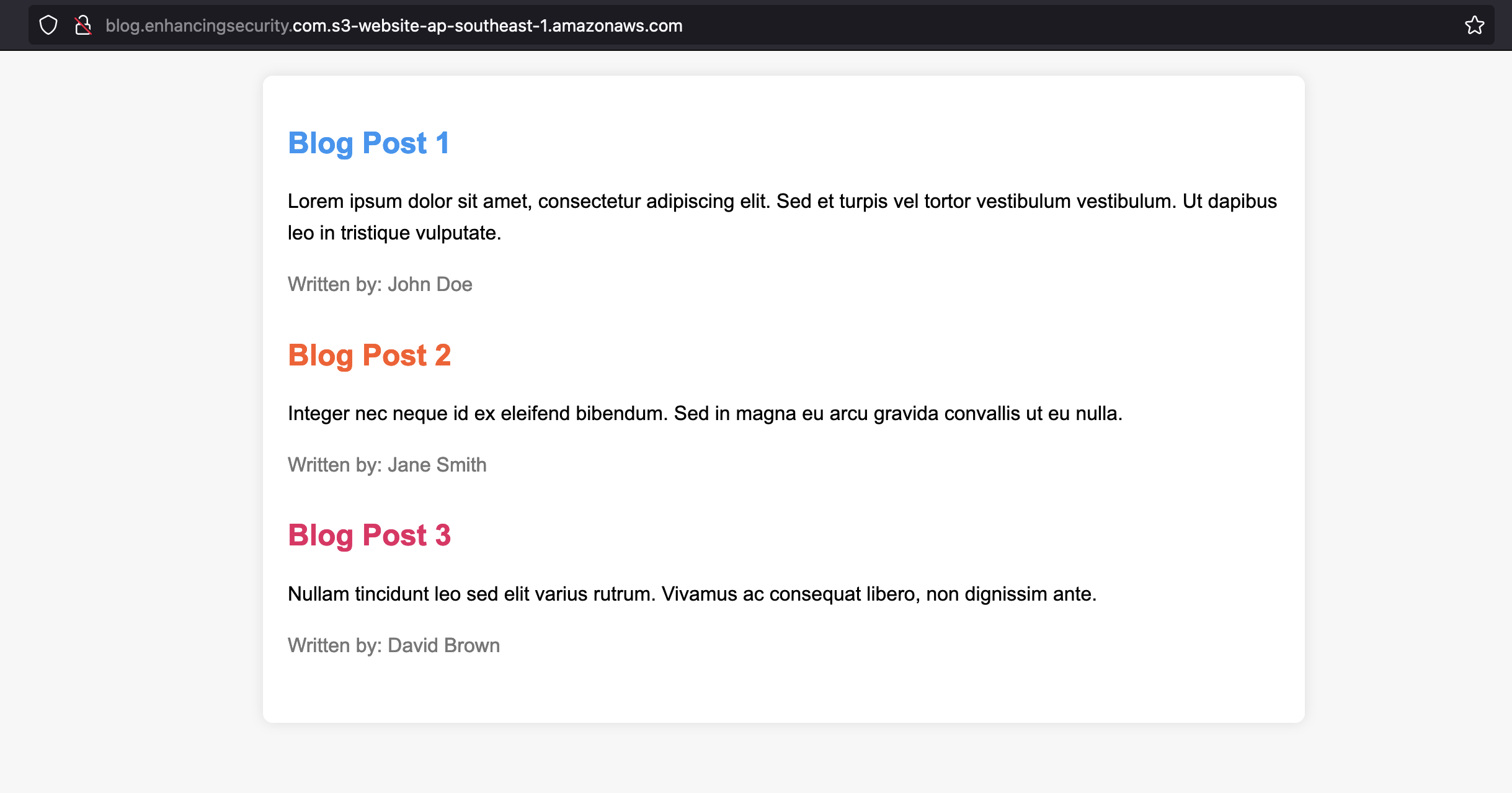

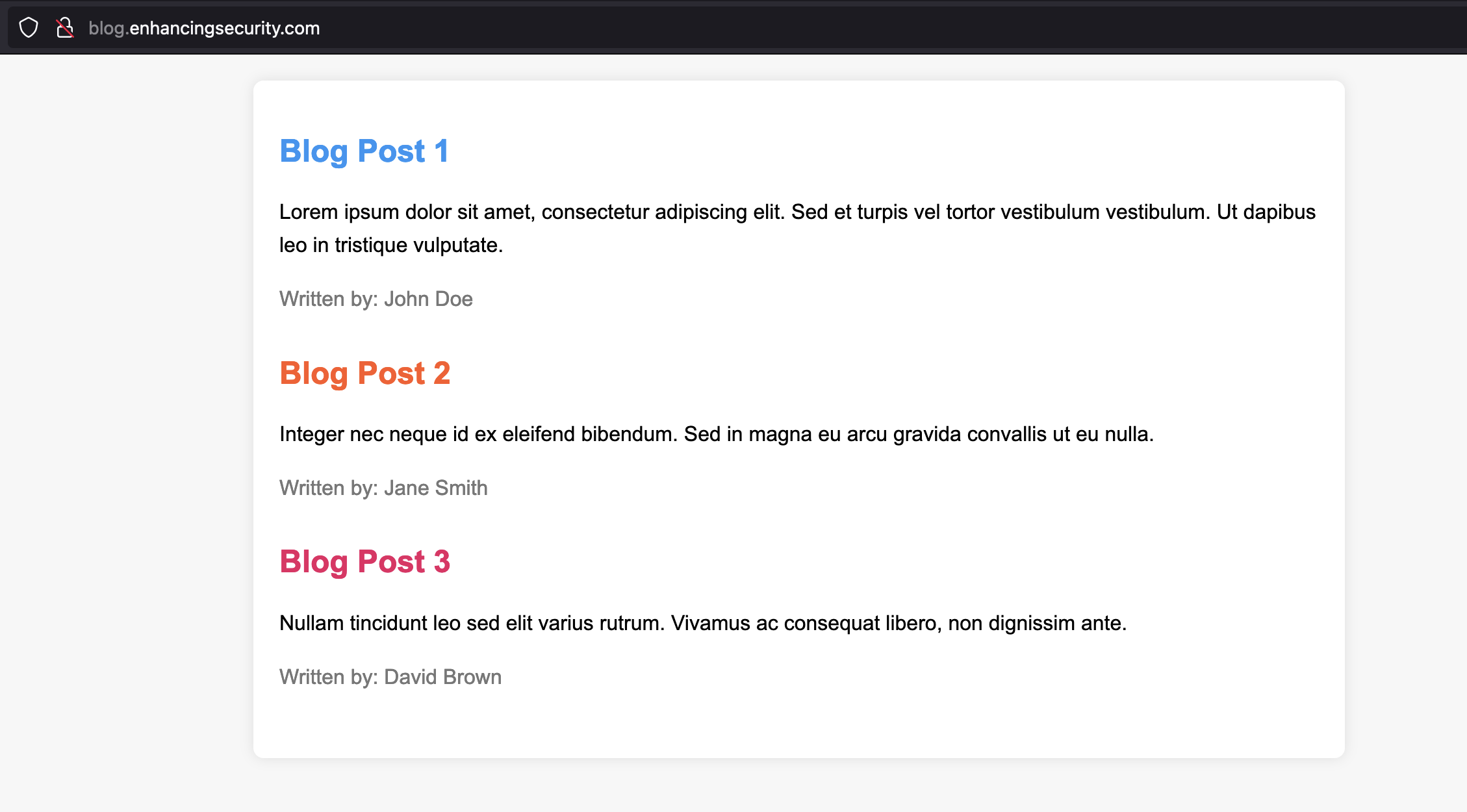

- Once we make the objects public the website should render as shown below by visiting the bucket website endpoint.
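The console steps in this part can also be expressed as API parameters. The following is a minimal sketch, not the exact procedure used in this walkthrough: it only builds the payload that boto3's `put_bucket_website` call accepts and the website endpoint the bucket would receive. The helper names are hypothetical, and the endpoint format assumes the dash-style `s3-website-<region>` form seen here (some regions use `s3-website.<region>` instead).

```python
def website_configuration(index_document: str = "index.html") -> dict:
    """Build the WebsiteConfiguration payload for static website hosting."""
    return {"IndexDocument": {"Suffix": index_document}}


def website_endpoint(bucket: str, region: str) -> str:
    """Return the dash-style S3 website endpoint for a bucket (assumed format)."""
    return f"http://{bucket}.s3-website-{region}.amazonaws.com"


if __name__ == "__main__":
    bucket = "blog.enhancingsecurity.com"
    region = "ap-southeast-1"
    print(website_configuration())
    print(website_endpoint(bucket, region))
    # With boto3 installed and credentials configured, the payload could be
    # applied roughly like this (not executed here):
    #   boto3.client("s3").put_bucket_website(
    #       Bucket=bucket, WebsiteConfiguration=website_configuration())
```

Keeping the payload construction separate from the AWS call makes the values easy to inspect before anything is changed in the account.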

Part 2: Pointing a subdomain at the S3 static website

We have successfully created a static website in the S3 bucket. The bucket website endpoint is http://blog.enhancingsecurity.com.s3-website-ap-southeast-1.amazonaws.com, and we will now point blog.enhancingsecurity.com at this endpoint so that the subdomain serves the bucket’s content.

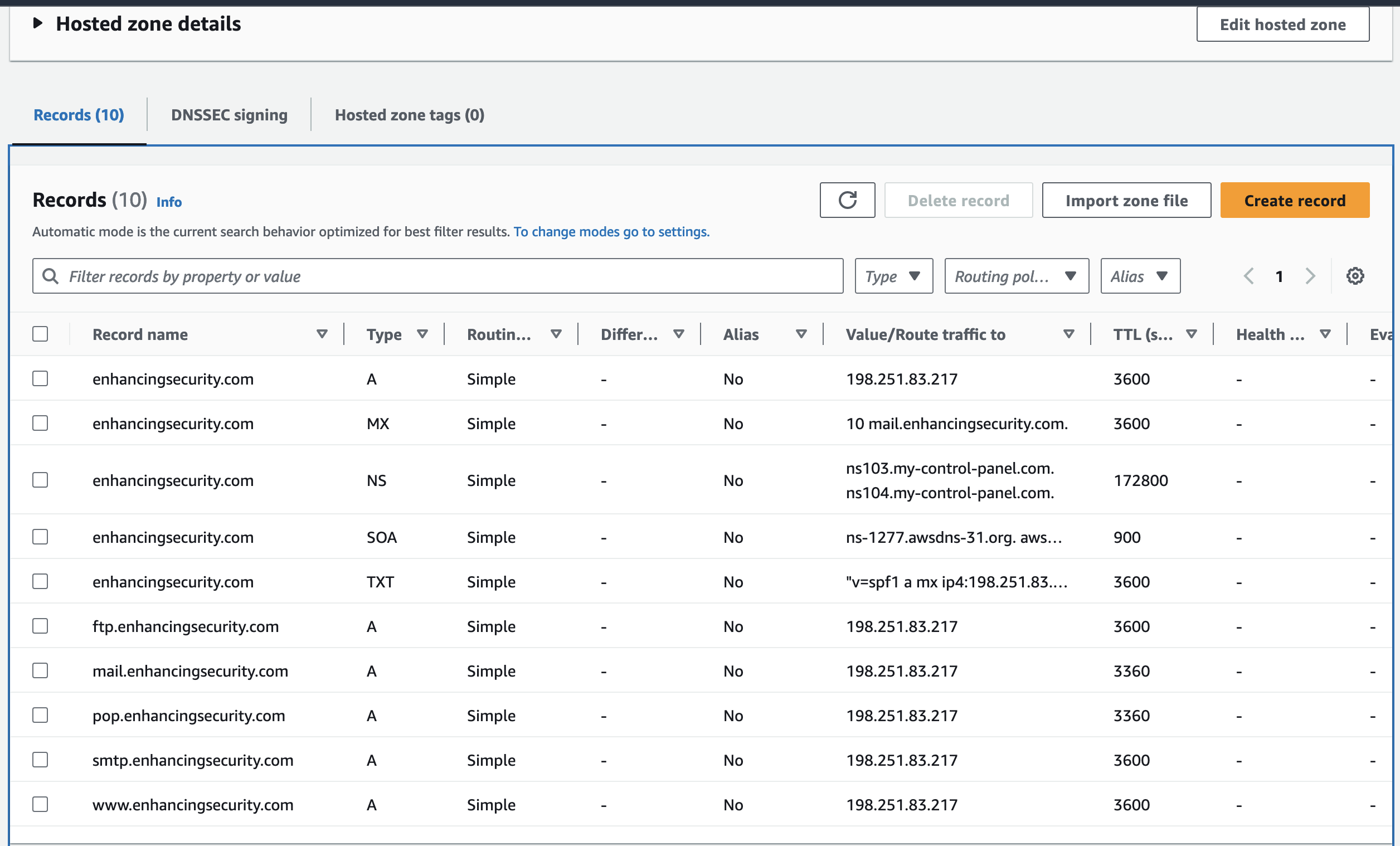

- Go to Route 53 and select the hosted zone for enhancingsecurity.com. We can see the existing DNS records below.

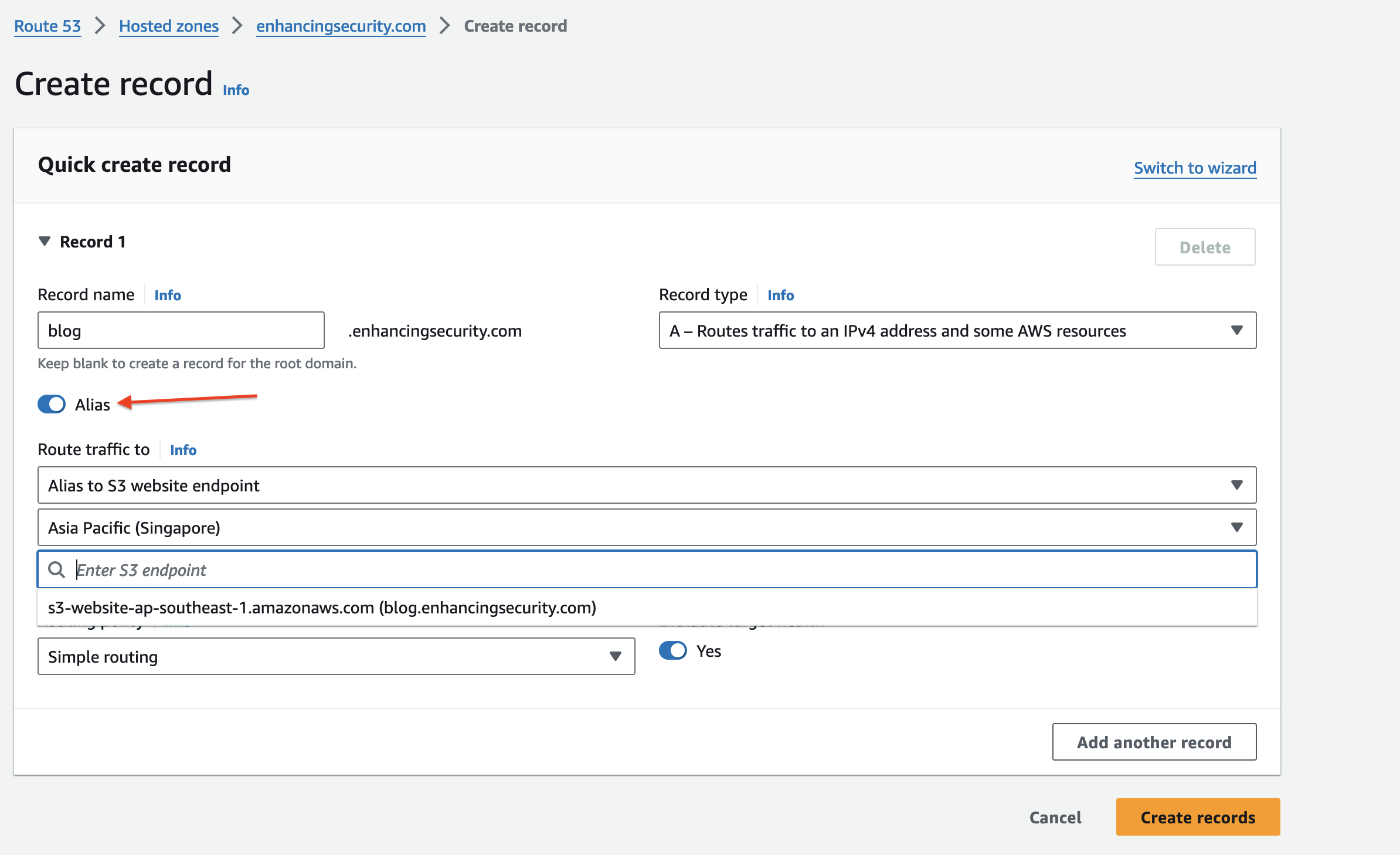

- Now we will create a new record named blog. The resulting subdomain will be blog.enhancingsecurity.com.

- While creating the record, select “A record” as the record type, enable Alias, and under “Route traffic to” choose “Alias to S3 website endpoint”. Then select Singapore as the region (we created the bucket in this region in Part 1). You’ll get the option to select the S3 bucket endpoint as shown below.

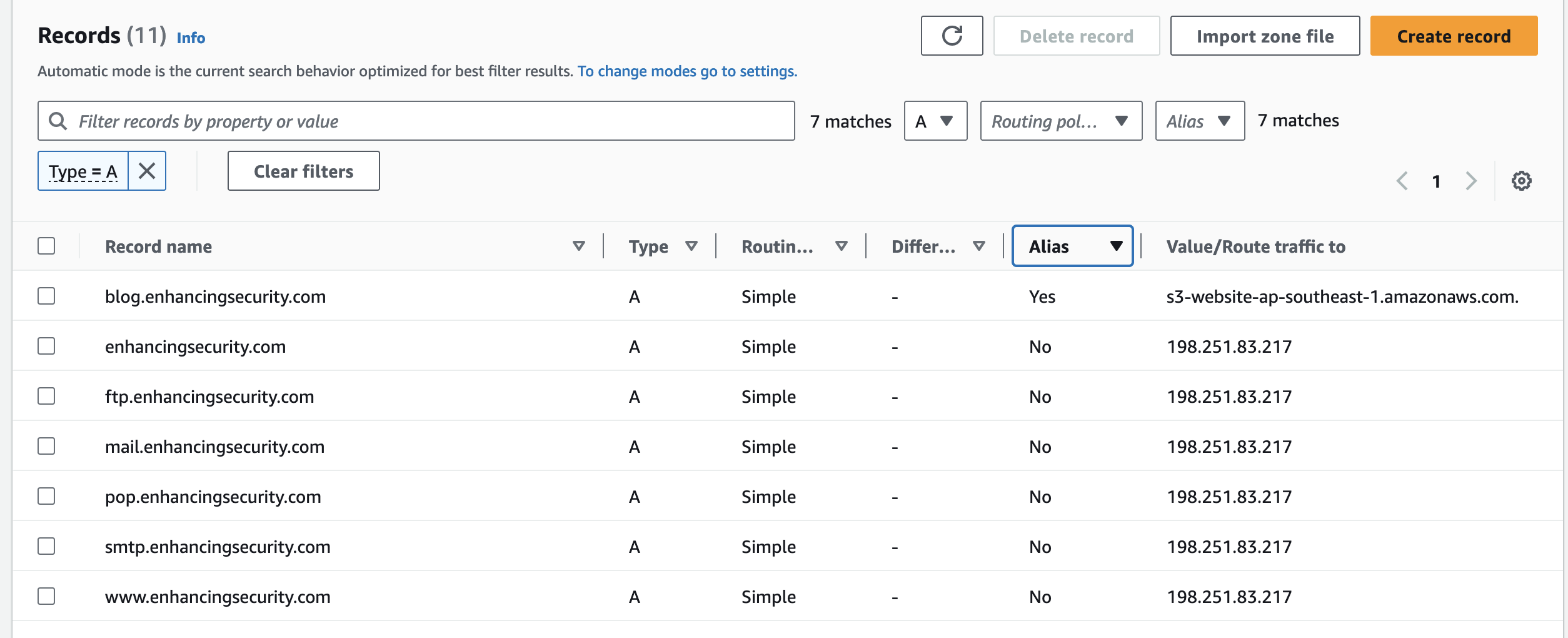

- Now we can see blog.enhancingsecurity.com pointed to s3-website-ap-southeast-1.amazonaws.com. which resolves to our static s3 website.

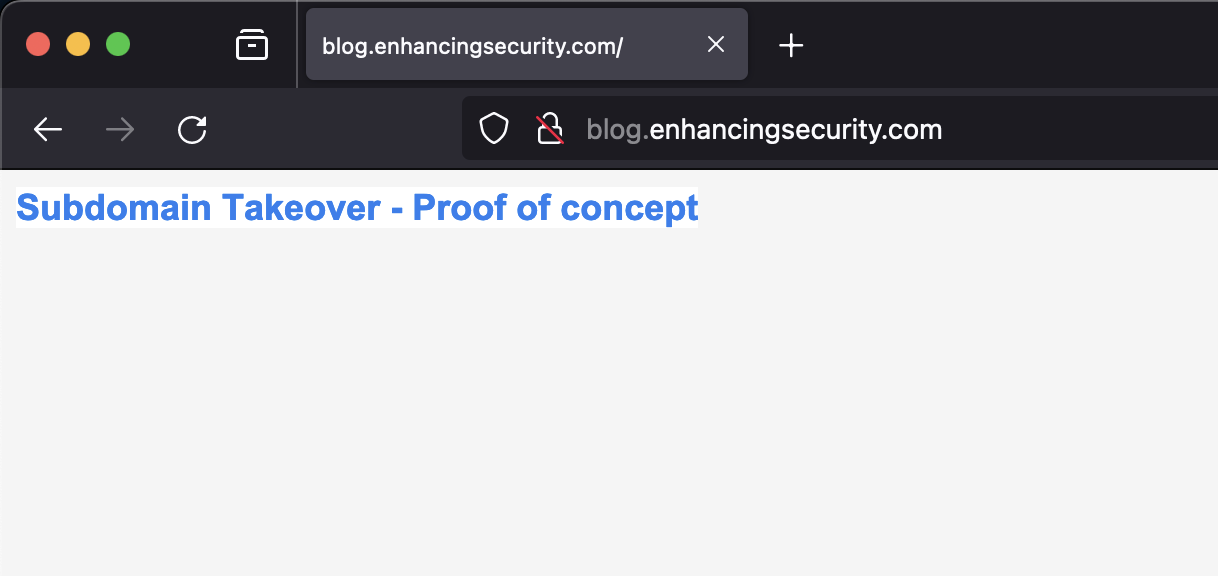

- As we can see our S3 static website content in the blog.enhancingsecurity.com
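The alias record from this part can be described as a Route 53 change batch. The sketch below is illustrative only: `alias_change_batch` is a hypothetical helper, and `alias_zone_id` is a placeholder for the region-specific hosted zone ID that AWS publishes for S3 website endpoints (it is not the ID of our own hosted zone). The dictionary shape follows what boto3's `change_resource_record_sets` expects for an alias record.

```python
def alias_change_batch(record_name: str, region: str, alias_zone_id: str) -> dict:
    """Build a ChangeBatch that aliases record_name to the regional S3 website endpoint."""
    return {
        "Changes": [
            {
                "Action": "UPSERT",
                "ResourceRecordSet": {
                    "Name": record_name,
                    "Type": "A",
                    "AliasTarget": {
                        # Placeholder: look up the real value for the bucket's region.
                        "HostedZoneId": alias_zone_id,
                        # Assumes the dash-style endpoint used in this walkthrough.
                        "DNSName": f"s3-website-{region}.amazonaws.com",
                        "EvaluateTargetHealth": False,
                    },
                },
            }
        ]
    }


if __name__ == "__main__":
    batch = alias_change_batch(
        "blog.enhancingsecurity.com", "ap-southeast-1", "ZEXAMPLEALIASZONE"
    )
    print(batch["Changes"][0]["ResourceRecordSet"]["AliasTarget"]["DNSName"])
    # With boto3, the batch could be submitted roughly like this (not executed here):
    #   boto3.client("route53").change_resource_record_sets(
    #       HostedZoneId="<our hosted zone ID>", ChangeBatch=batch)
```

Note that the record itself never names the bucket. The S3 website endpoint picks the bucket from the requested host name, which is why a bucket with the same name in any account can later answer for this subdomain.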

Part 3: Deleting the S3 bucket

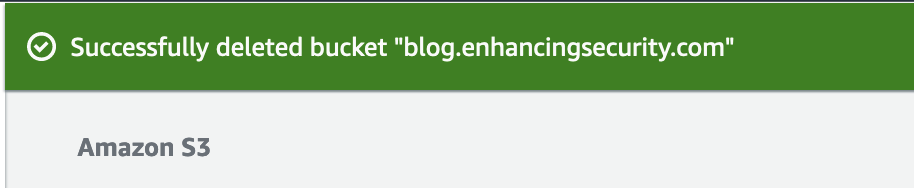

In this part, we will delete the S3 bucket that hosts the content for our blog. To do this, go to Amazon S3, empty the bucket, and then delete it.

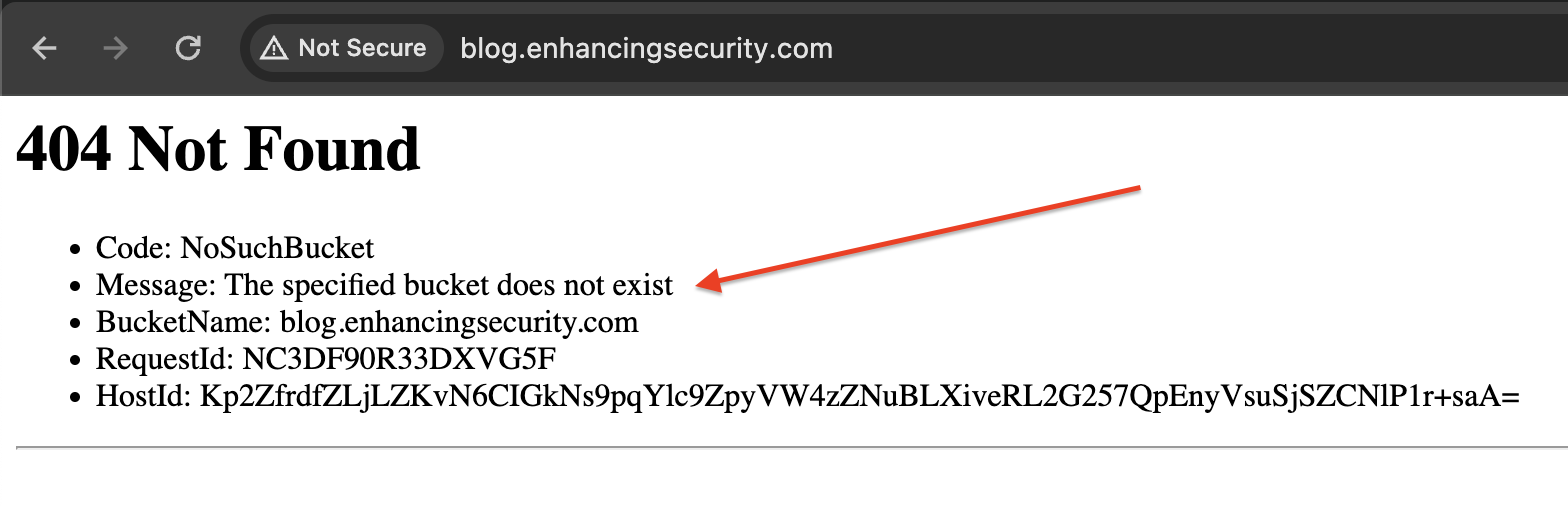

Now open http://blog.enhancingsecurity.com/ in a browser, and we see the following error message.

The error message indicates that the bucket does not exist. Because the DNS record still points at the S3 website endpoint, anyone can create a bucket with the same name and host whatever content they want on our subdomain. We will do exactly that in Part 4.
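The same check can be automated. The following is a rough sketch under stated assumptions: it treats an HTTP 404 whose body contains the S3 error code `NoSuchBucket` as a sign of a dangling record. The function names and the abbreviated sample body are illustrative, and `fetch` is included only to show how a real response could be obtained.

```python
import urllib.error
import urllib.request
from typing import Tuple


def fetch(url: str, timeout: float = 10.0) -> Tuple[int, str]:
    """Return (status code, body text) for a URL, including HTTP error responses."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status, resp.read().decode("utf-8", errors="replace")
    except urllib.error.HTTPError as err:
        return err.code, err.read().decode("utf-8", errors="replace")


def indicates_missing_bucket(status_code: int, body: str) -> bool:
    """True when the response looks like the S3 'bucket does not exist' error page."""
    return status_code == 404 and "NoSuchBucket" in body


if __name__ == "__main__":
    # Abbreviated, illustrative error body; a real one contains more fields.
    sample = "404 Not Found\nCode: NoSuchBucket\nMessage: The specified bucket does not exist"
    print(indicates_missing_bucket(404, sample))
    # Against a live host (not executed here):
    #   status, body = fetch("http://blog.enhancingsecurity.com/")
    #   print(indicates_missing_bucket(status, body))
```

A positive result only suggests that the record is dangling; it should be confirmed against the hosted zone before anyone acts on it.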

Part 4: Taking over the subdomain

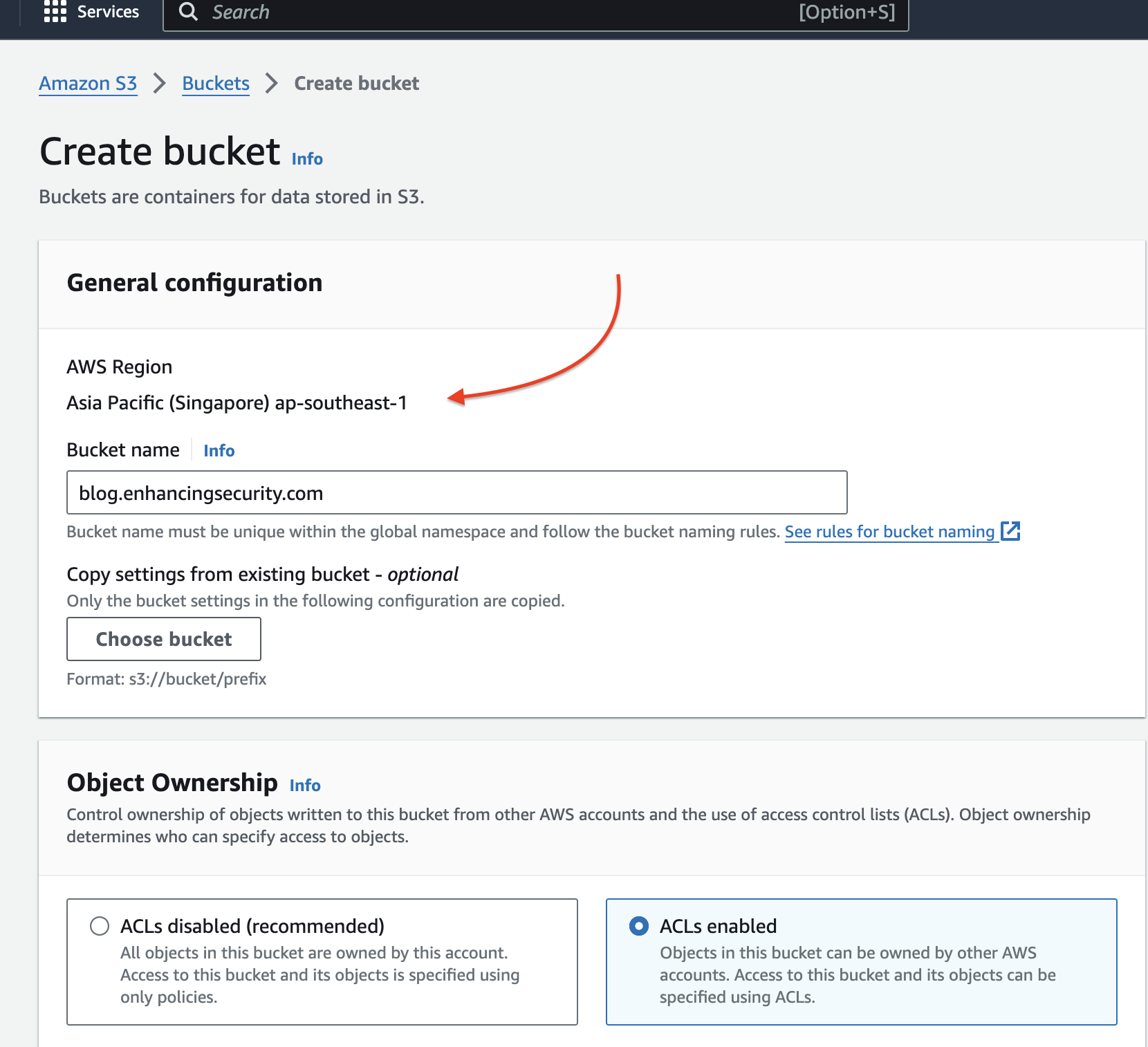

- Now we will log into another AWS account and create an S3 bucket with the same name, blog.enhancingsecurity.com, in the Asia Pacific (Singapore) ap-southeast-1 region.

- As before, we make the bucket publicly accessible, upload an HTML file containing our takeover message, and make the object public using an ACL, as shown below.

- As before, we enable static website hosting and specify index.html, which contains the subdomain takeover message, as the index document.

- Now if we visit blog.enhancingsecurity.com, we see the content served from the S3 bucket in our own AWS account.

This is how we can take over a subdomain when its DNS record still exists and the service behind it is eligible for takeover. You can refer to the GitHub repo Can I Take Over XYZ to check which services are vulnerable to takeover and which are not.

Elastic Beanstalk:

AWS Elastic Beanstalk is a service that makes it easy to deploy and scale web applications and services. If an Elastic Beanstalk environment is deleted or left unused while a DNS record still points at it, an attacker could potentially claim the same Elastic Beanstalk domain that the application previously used. This could allow the attacker to take control of the subdomain and potentially gain access to sensitive information or resources associated with the original application.

Identifying vulnerable Elastic Beanstalk domains is quite easy. We only need to check that we get an NXDOMAIN response to our DNS query and that the pointed domain has the form

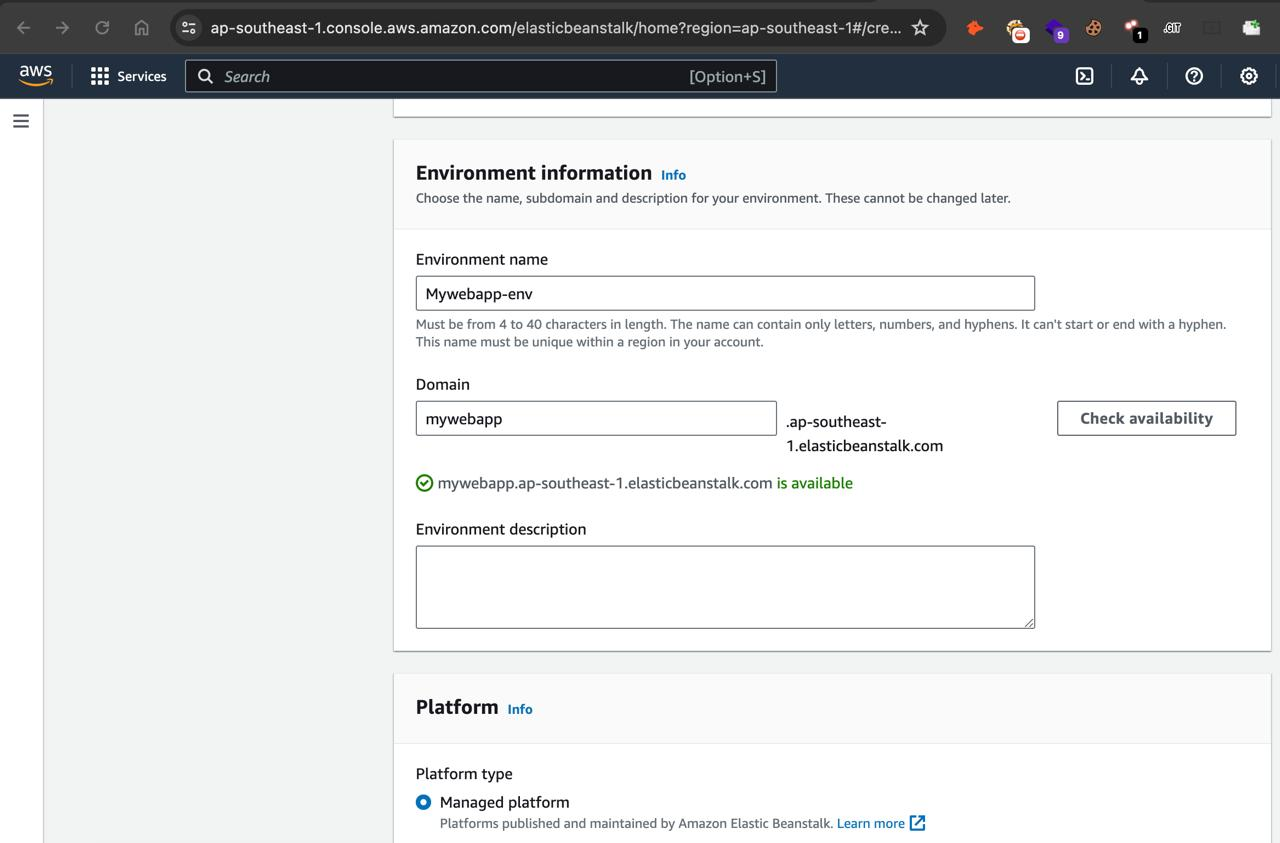

- Go to the Elastic Beanstalk service in AWS and create a new app with the following configuration.

- On the same page, we will select a sample application so that the environment has something to run. For now, we are selecting a Node.js web application.

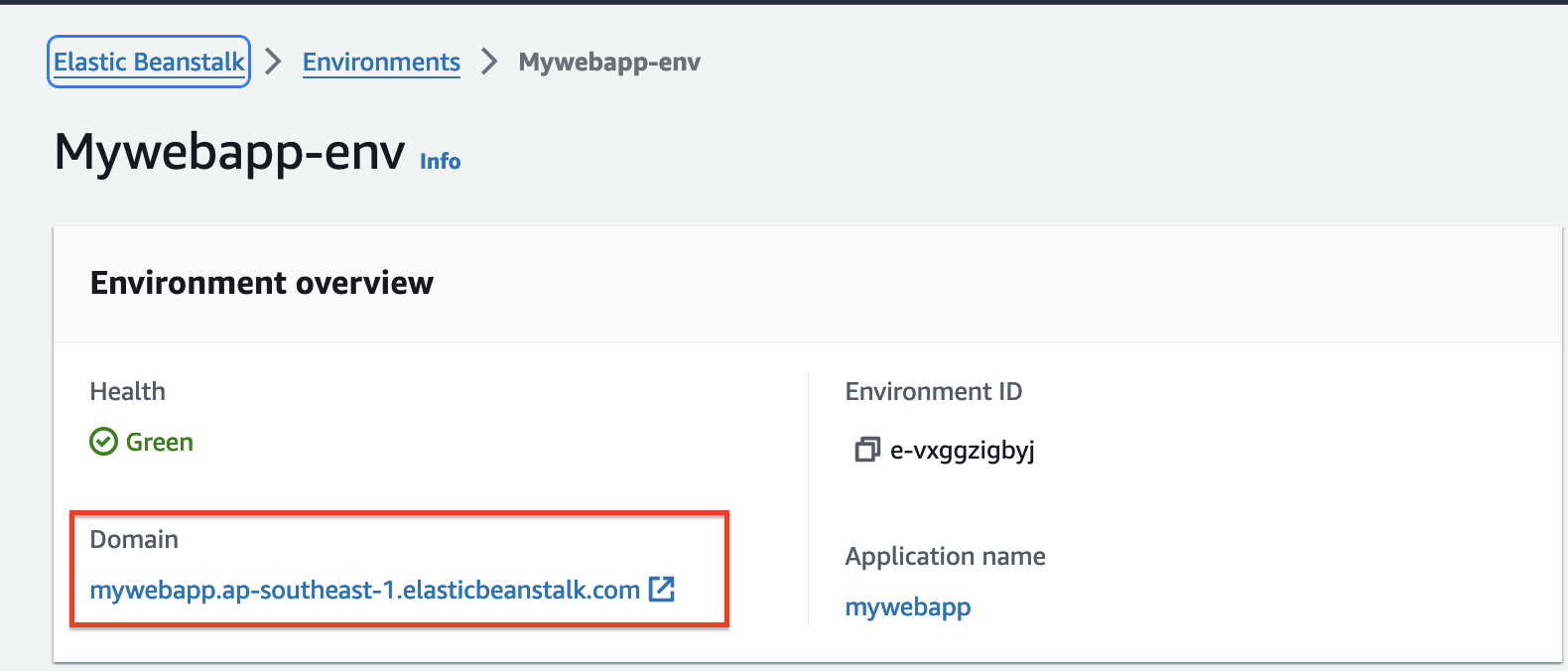

- After completing all the steps we will see our application is launching with a unique AWS Elastic Beanstalk domain as shown below.

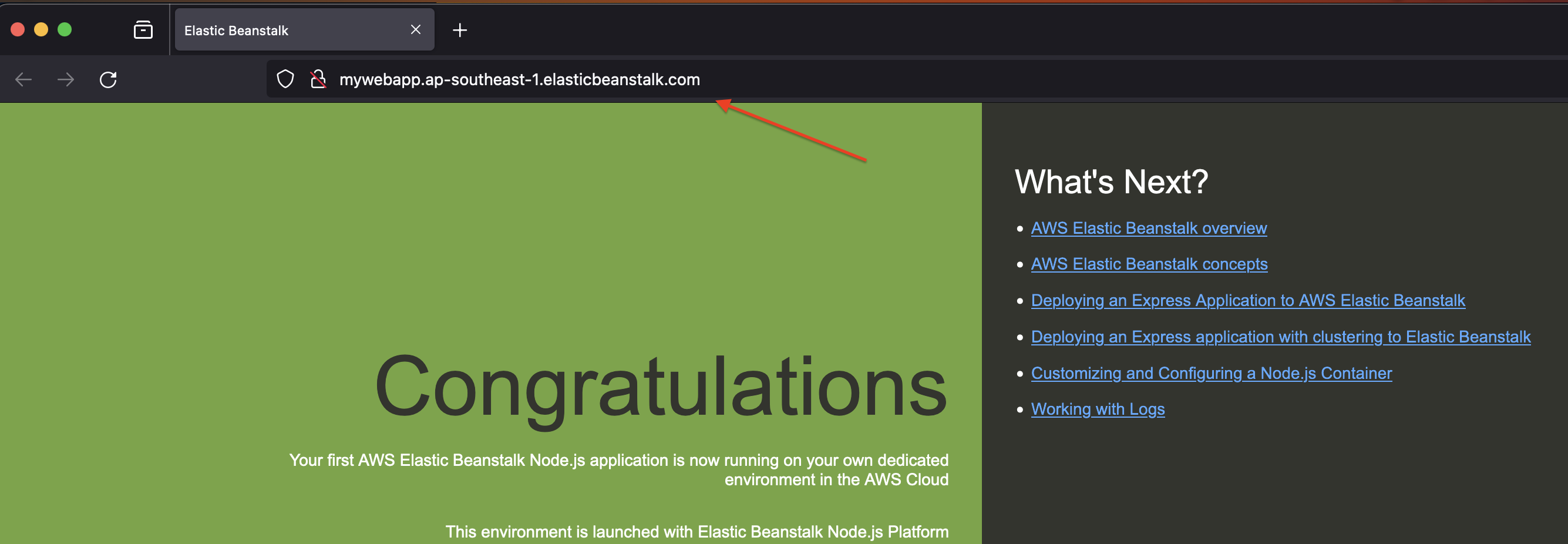

- Opening the Elastic Beanstalk domain we can see NodeJs default page below.

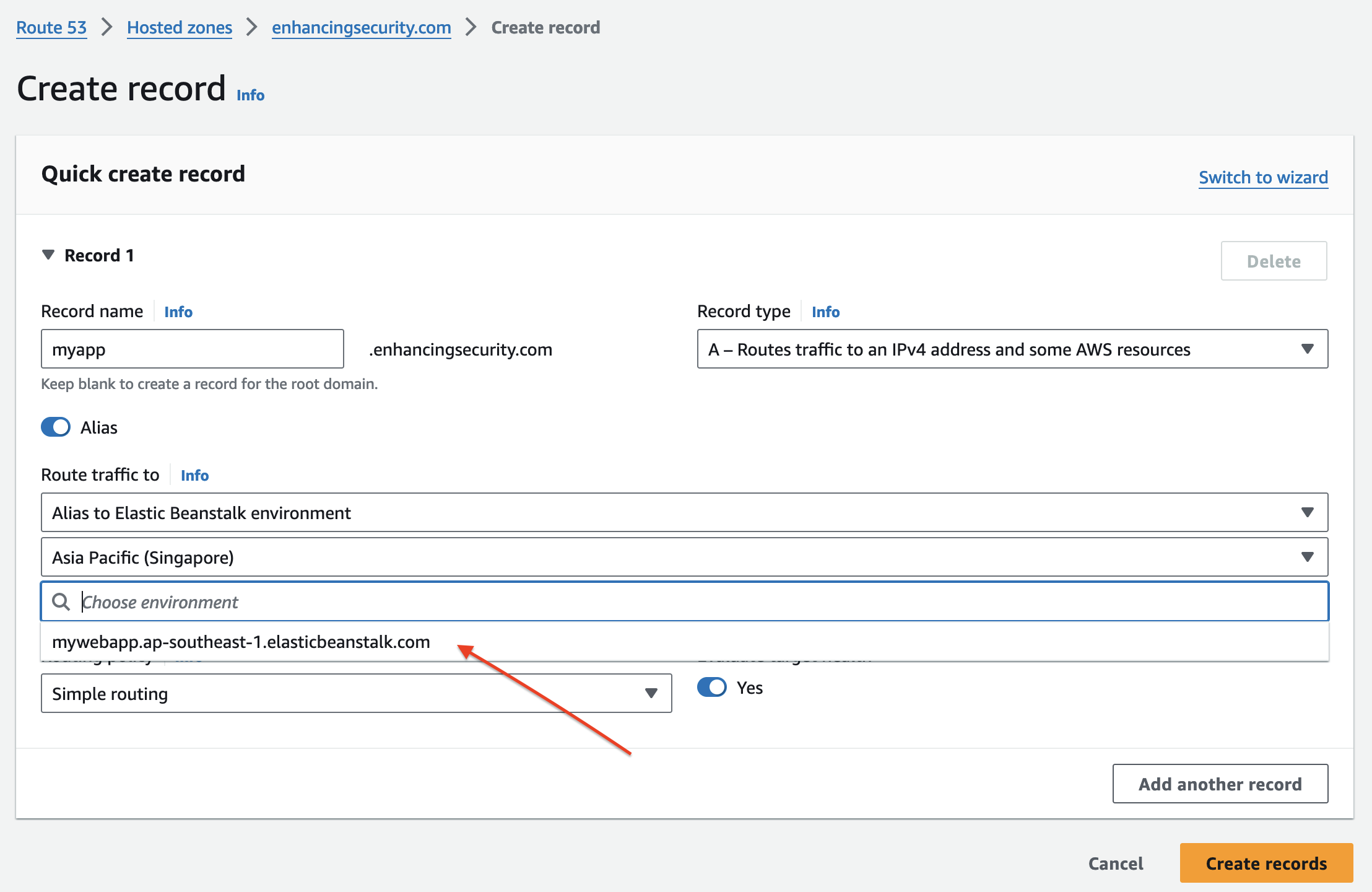

- Now we’ll create a Route 53 DNS record so that myapp.enhancingsecurity.com points to this Elastic Beanstalk app. We will create an “A record” for our subdomain that points to the Beanstalk environment we created.

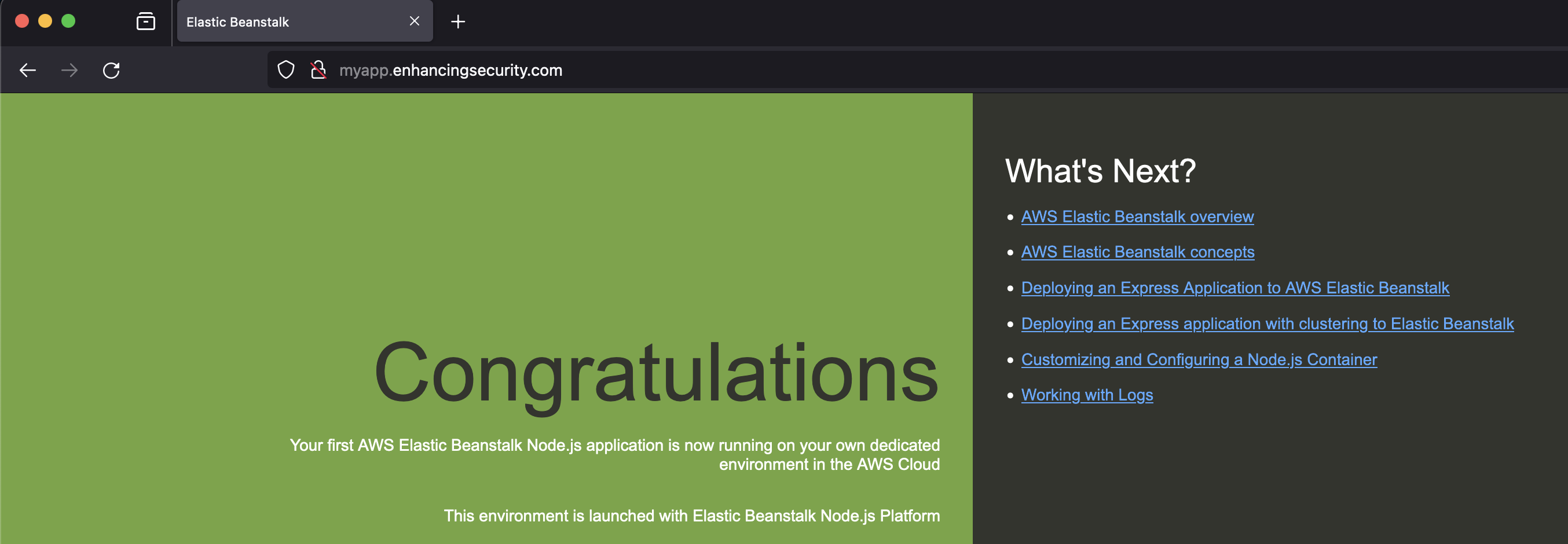

- Once the record is in place, we can see our default Node.js app loading at myapp.enhancingsecurity.com.

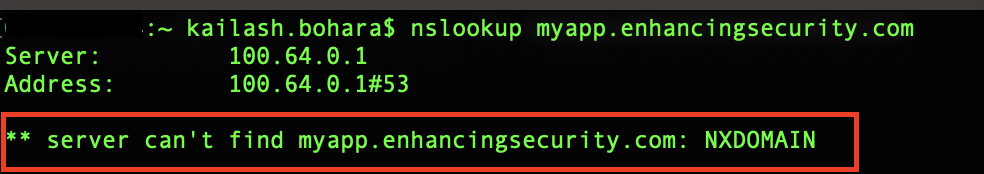

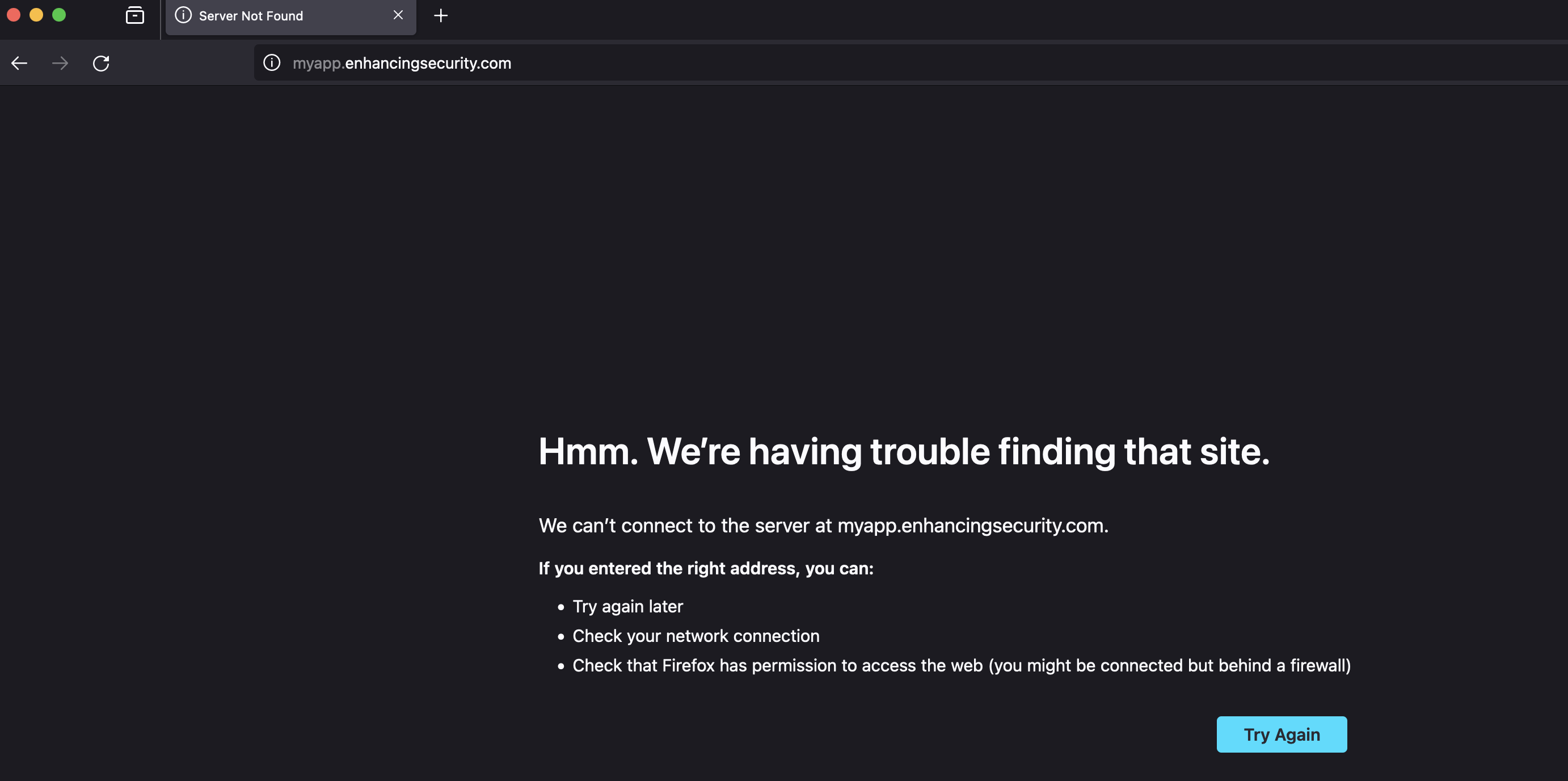

- Now we’ll delete the Beanstalk app but not the Route 53 record, and check how the subdomain resolves using the command:

nslookup myapp.enhancingsecurity.com

If we see the message “** server can’t find myapp.enhancingsecurity.com: NXDOMAIN,” this confirms that our subdomain is vulnerable to takeover.
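The two conditions for a vulnerable Elastic Beanstalk record, an NXDOMAIN answer and a record target in the Elastic Beanstalk domain, can be combined in a small check. This is a sketch under assumptions, not a definitive detector: the pattern `<prefix>.<region>.elasticbeanstalk.com` is assumed here, the function names are hypothetical, and the target `myapp.ap-southeast-1.elasticbeanstalk.com` is a made-up example rather than the environment used in this walkthrough.

```python
import re

# Assumed form of a regional Elastic Beanstalk domain: <prefix>.<region>.elasticbeanstalk.com
EB_DOMAIN = re.compile(
    r"^[a-z0-9-]+\.[a-z0-9-]+\.elasticbeanstalk\.com\.?$", re.IGNORECASE
)


def is_beanstalk_domain(target: str) -> bool:
    """True when the record target matches the assumed regional Beanstalk form."""
    return EB_DOMAIN.match(target.strip()) is not None


def reports_nxdomain(nslookup_output: str) -> bool:
    """True when captured nslookup output contains an NXDOMAIN answer."""
    return "NXDOMAIN" in nslookup_output


def looks_like_takeover_candidate(record_target: str, nslookup_output: str) -> bool:
    """Both conditions must hold: a Beanstalk target and an NXDOMAIN lookup."""
    return is_beanstalk_domain(record_target) and reports_nxdomain(nslookup_output)


if __name__ == "__main__":
    output = "** server can't find myapp.enhancingsecurity.com: NXDOMAIN"
    # Hypothetical record target, used for illustration only.
    target = "myapp.ap-southeast-1.elasticbeanstalk.com"
    print(looks_like_takeover_candidate(target, output))
```

The record target would come from the Route 53 record itself, and the lookup text from running the `nslookup` command above and capturing its output.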

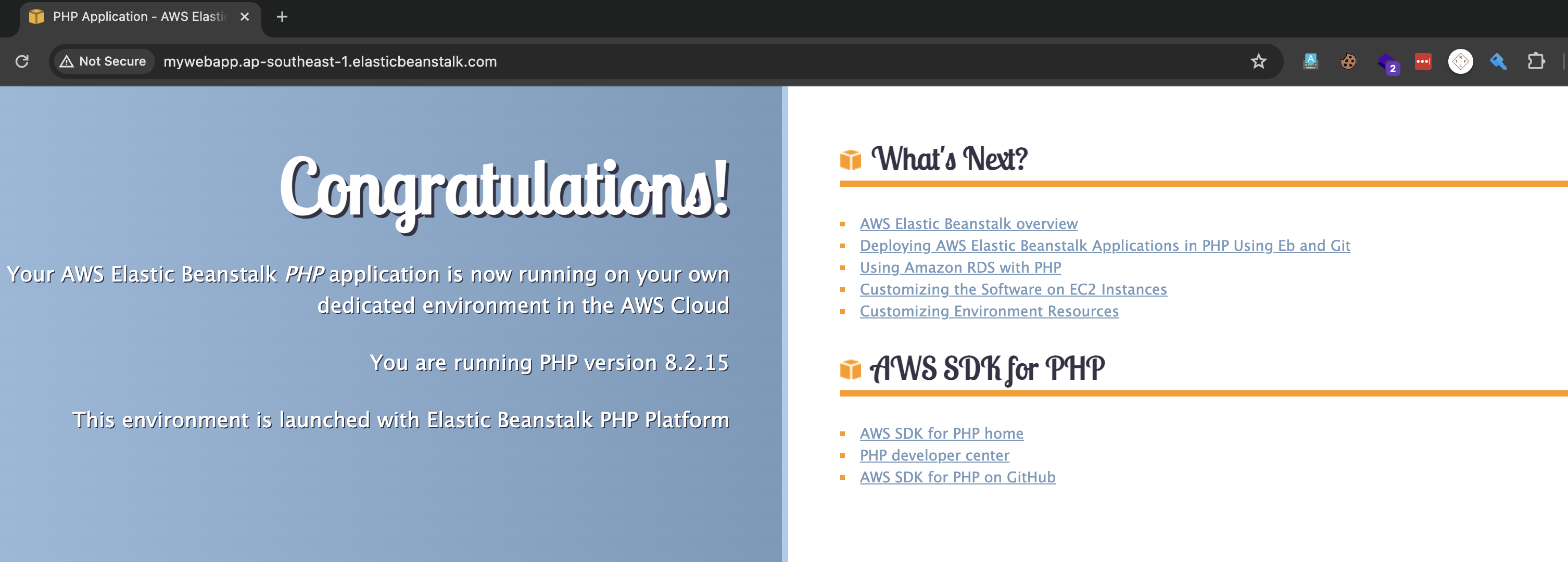

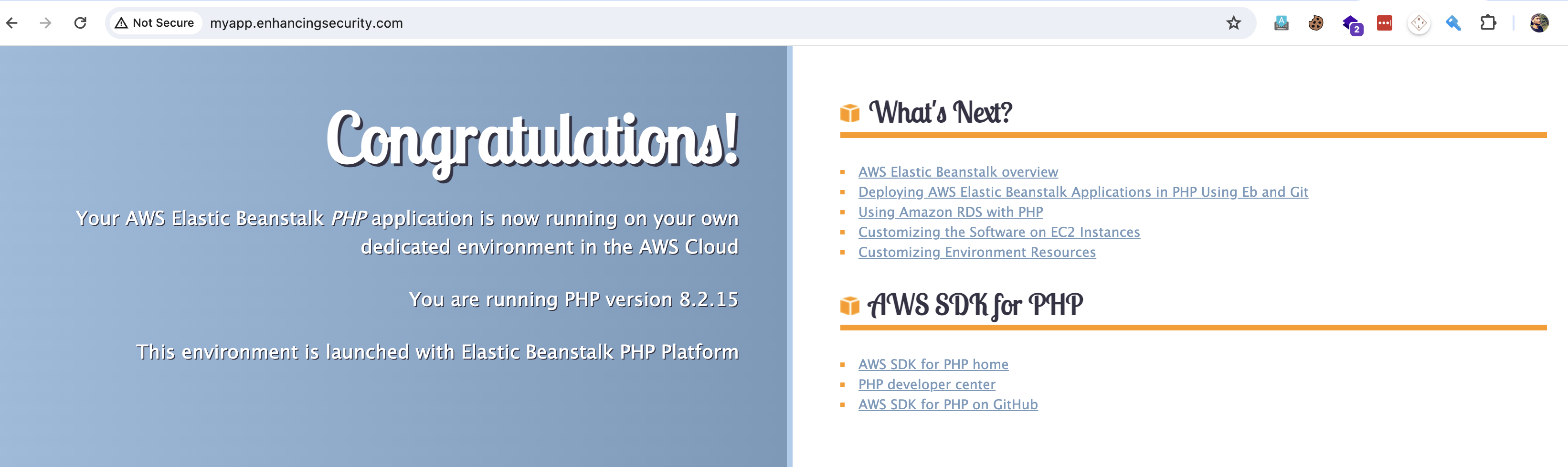

- Now, we will create an Elastic Beanstalk app with the same name in the same region in another AWS account. Because the Route 53 record still points at that Beanstalk domain, the subdomain myapp.enhancingsecurity.com now serves our new app. This time we select a PHP web app, and we get the Beanstalk domain response shown below.

- Checking the subdomain http://myapp.enhancingsecurity.com/, we can see that our PHP application is running.

AWS Route53 DNS Security Practices

- Periodically audit and clean up outdated or unused DNS records to reduce the attack surface.

- Enable Domain Privacy Protection in AWS Route 53 to protect domain owners’ personal information.

- Enable Auto-Renewal for a Domain. This prevents service disruptions or loss of ownership.

- Restrict access to Route 53 resources with finely tuned Identity and Access Management (IAM) policies, granting the least privilege permissions only to users and applications that need them.

- For private hosted zones, limit access to Route 53 resolvers to only trusted VPCs to protect internal DNS data.
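The first practice, auditing for outdated records, can be partly automated. The following is a minimal sketch under stated assumptions: it takes record sets shaped like the entries returned by boto3's `list_resource_record_sets` and a set of bucket names we own (for example, gathered with `list_buckets`), and reports alias records that point at an S3 website endpoint with no matching bucket. The function name and sample data are hypothetical.

```python
from typing import Iterable, List, Set


def dangling_s3_aliases(record_sets: Iterable[dict], owned_buckets: Set[str]) -> List[str]:
    """Return record names aliased to an S3 website endpoint without an owned bucket."""
    findings = []
    for record in record_sets:
        alias = record.get("AliasTarget")
        if not alias:
            continue  # not an alias record
        target = alias.get("DNSName", "").rstrip(".").lower()
        if not target.startswith("s3-website"):
            continue  # alias to something other than an S3 website endpoint
        # For S3 website aliases, the record name doubles as the bucket name.
        name = record["Name"].rstrip(".").lower()
        if name not in owned_buckets:
            findings.append(name)
    return findings


if __name__ == "__main__":
    # Illustrative records only; the IP address is from a documentation range.
    records = [
        {"Name": "blog.enhancingsecurity.com.", "Type": "A",
         "AliasTarget": {"DNSName": "s3-website-ap-southeast-1.amazonaws.com."}},
        {"Name": "www.enhancingsecurity.com.", "Type": "A",
         "ResourceRecords": [{"Value": "203.0.113.10"}]},
    ]
    print(dangling_s3_aliases(records, owned_buckets=set()))
```

Running a check like this on a schedule would surface a record such as the one left behind in Part 3 before someone else claims the bucket name.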